Trust and UI Design: The Heart of Human-AI Teaming

Joseph Mariña

Artificial Intelligence, Human Centered Design, Emerging Technologies

As AI increasingly integrates into our daily routines, it does not just automate routine tasks; it collaborates with humans for decision-making and creative activities. Two vital factors underpin the success of human-AI partnerships: trust and user interface (UI) design. This blog explores how to build trust in human-AI teams and considerations for the corresponding interfaces.

Variety in Human-AI Partnerships

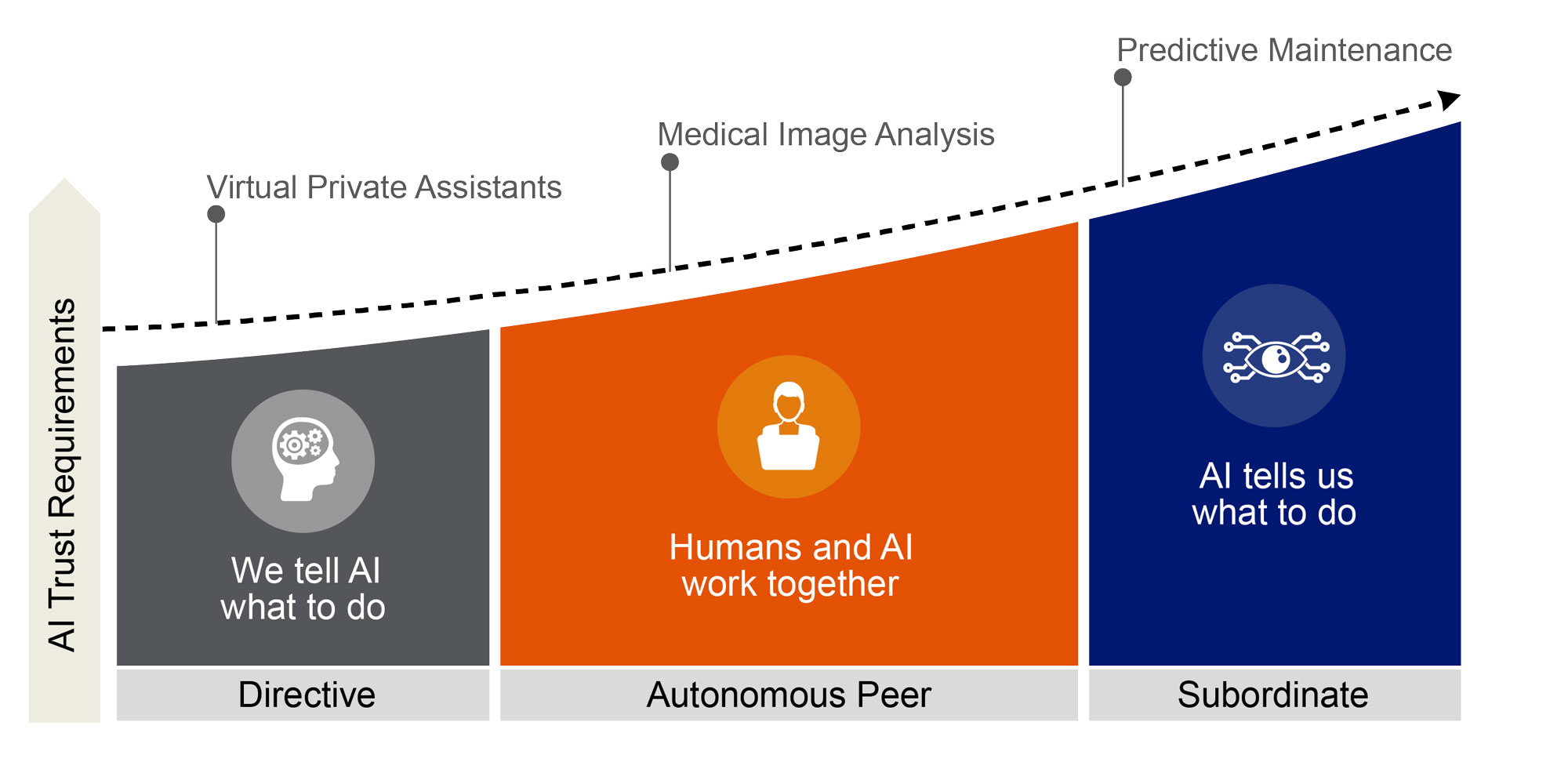

Human and AI collaboration takes different forms, ranging from humans giving AI explicit commands to AI taking the lead. The requirement for trust in this partnership varies (Figure 1) based on AI’s role. When AI handles mundane tasks, such as acting as a virtual personal assistant (VPA), trust comes easily. However, as we give more control to AI, trust becomes more fragile. The lack of transparency and explainability from AI becomes a hurdle, especially when disagreements arise between humans and AI.

Figure 1: Human-AI partnerships form differently across this spectrum.

Building Trust with AI

As AI's role expands, organizations seek to foster trust in human-AI collaborations to enable AI's success in tasks that demand complex decision-making. Surprisingly, AI interactions may be able to apply trust-building techniques that work among humans. Simple practices, like repeating a user's name and reciprocating actions, enhance the likability and trustworthiness of AI. When designing human-AI interfaces, organizations build on these principles to create more trustworthy AI interactions.

The Power of Transparency

Transparency is a key element in building trust with AI. VPAs, like Alexa or Siri, offer clear prompts and acknowledge their own limitations by encouraging users to ask them what they can do. Similarly, a practice called an “accusation audit” can help manage expectations around interactions with AI systems by having AI call out what it can’t do well. This transparency sets proper expectations and helps in forming trust. For more complex AI systems, emulating these transparency principles and admitting their limitations in certain contexts fosters trust.

Trust Is a Two-Way Street

As AI takes on more complex tasks, trust should be considered bidirectional. AI relies on humans as powerful sensors for nuances, context, and subjective measurements that are not easily quantified. Humans serve as contextual drift detectors in human-AI collaborations, sensing changes in context and society that AI can miss. This two-way trust is crucial for a successful partnership.

Users must accurately describe scenarios and needs without introducing bias or censoring information. The trustworthiness of a human teammate’s input affects outcomes. Human-AI teams benefit from sensors and user data that guide AI’s confidence in the information provided by human teammates.

User-Centered Design for Human-AI Applications

Incorporating adoption management into UI design will also play a role in the success of human-AI applications. Cognitive dissonance, where users double down on suboptimal decisions, must be considered. Expanding human-centered design (HCD) principles to include AI interactions helps build trust between humans and AI. Interfaces and designs should consider AI as a user and modify interfaces and data input for both human and computer readability.

Human-AI Teaming Lessons Learned

LMI and its technology accelerator, LMI Forge, have begun exploring the effects of shifting more responsibility to AI across the human-AI spectrum, through VPAs and proofs of concept that explore a peer-level approach.

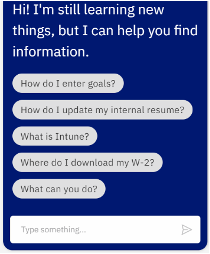

LMI implemented a VPA using IBM Watson Assistant to help employees find answers to common questions. Likely due to the rapid developments in AI, inputs from users varied widely, with some trying to use it as a keyword search while others expected it to work like generative AI. Adding conversation starters (Figure 2) and accusation audits in the greeting helped generate contingent trust, letting users know what type of questions to ask the VPA. Guiding users to the right type of questions increased the VPA’s effectiveness.

Figure 2: Conversation starters help create contingent trust.
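To make the idea concrete, here is a minimal sketch of how a greeting might combine an accusation audit with conversation starters. It is not the LMI implementation and does not use the IBM Watson Assistant API; `GreetingConfig`, `build_greeting`, and the sample text are hypothetical names chosen for illustration only.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class GreetingConfig:
    """Hypothetical settings for a VPA greeting (illustrative, not a real API)."""
    assistant_name: str
    limitations: List[str] = field(default_factory=list)  # accusation audit items
    starters: List[str] = field(default_factory=list)     # example questions to ask


def build_greeting(config: GreetingConfig, user_name: Optional[str] = None) -> str:
    """Compose a greeting that states limitations first, then suggests questions."""
    if user_name:
        lines = [f"Hi {user_name}, I'm {config.assistant_name}."]
    else:
        lines = [f"Hi, I'm {config.assistant_name}."]

    # Accusation audit: call out what the assistant does poorly, up front.
    if config.limitations:
        lines.append("Before we start, here is what I can't do well:")
        lines.extend(f"- {item}" for item in config.limitations)

    # Conversation starters: show the kind of question that works.
    if config.starters:
        lines.append("Try asking me:")
        lines.extend(f"- {item}" for item in config.starters)

    return "\n".join(lines)


if __name__ == "__main__":
    config = GreetingConfig(
        assistant_name="HelpBot",
        limitations=["I can't search documents by keyword."],
        starters=["How do I reset my password?"],
    )
    print(build_greeting(config, user_name="Ana"))
```

Placing the limitations before the starters is a design choice in this sketch: the user reads what not to expect first, then sees the kind of question the assistant handles well.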

Another example is Vitality Hive, a proof of concept developed by LMI Forge to harmonize data from diverse sources, including electronic health records, wearables, and dietary logs, to equip individuals with tailored wellness insights and notes. These insights could not only empower informed decision-making but also foster productive dialogues between patients and healthcare providers and enable providers to deploy resources for maximal impact. Designing this interface required transparency around data use and sharing to gain cooperation from users. In designing Vitality Hive, as well as other proofs of concept with Forge™, we noted that many human-AI interfaces are becoming buttonless, with less need for users to navigate through a flow. Instead, users express a goal and AI surfaces the information they need.

Joseph Mariña

Fellow, Agile Transformation Coach

Joseph Mariña has experience as a scrum master and agile coach across many agile frameworks. He has more than ten years of experience working on an array of technical solutions.